Project Center in Trichy - Power Integrated

ARMOUR PROJECT

The ARMOUR project is focused on carrying out large-scale experimentally-driven research. To achieve this, a proper

experimentation methodology will be implemented,

technologies subject to experimentation will be benchmarked

and a new certification scheme will be designed in order to

provide a quality label for proposed solutions. Also, security

and trust of large-scale IoT applications will be studied to

establish a design guidance for developing applications that are

secure and trusted in the large-scale IoT. Finally, data and

benchmarks from experiments will be properly handled,

preserved and made available via FIESTA IoT/Cloud

infrastructure.

For this purpose, this facility will be adapted and

configured to hold the ARMOUR experimentation data and

benchmarks. In this way, research data are properly preserved

and made available to the research communities, making it

possible to compare results of experiments performed in

different testbeds and also to contrast the results of disparate

security and trust technologies.

A. Experimentation methodology

The ARMOUR project considers a large-scale

experimentally-driven research approach due to IoT

complexity (high dimensionality, multi-level interdependencies

and interactions, non-linear highly-dynamic behavior, etc.).

This research approach makes it possible to experiment with and

validate research technological solutions in large-scale

conditions and very close to real-life environments. Besides, it

is performed through a well-established methodology for

conducting good experiments that are reproducible, extensible,

applicable and revisable. Thus, the methodology aims at

checking the repeatability, reproducibility and reliability

conditions to ensure that experimental results can be generalized and that their credibility can be verified. This methodology consists of

four phases, which are described below.

The first phase, Experimentation Definition & Support,

marks the start of the experimentation process and involves:

• Definition of the IoT security and trust experiments

(testing scenarios, needed conditions, analysis

dimensions) and the technological architecture for

ARMOUR experimentation;

• Research and development of the ARMOUR

technological experimentation suite and benchmarking

methodology for executing, managing and

benchmarking large-scale security and trust IoT

experiments;

• Analysis of the FIT IoT-LAB testbed [9] and FIESTA

IoT/Cloud platform for evaluating their composition,

supports and services from the perspective of

ARMOUR experimentation.

In the next phase, Testbeds Preparation & Experimentation

Set-up, the conditions for conducting the ARMOUR

experimentation using the selected testbeds are established and

the IoT security and trust experiments are prepared. This

phase involves:

• Extend, adapt and configure the FIT IoT-LAB testbed

with the ARMOUR experimentation suite to enable

IoT large-scale security and trust experiments from FIT

IoT-LAB and FIESTA IoT/Cloud testbeds;

• Prepare the FIESTA IoT/Cloud platform to hold data

sets from ARMOUR experiments, enabling researchers

to perform security and trust oriented experiments,

generate new datasets and perform benchmarks;

• Set up and prepare the ARMOUR experiments

by specifying the security and trust test patterns for the

experimentation that will be used to execute and to

manage such experiments.

The third phase, Experiments Execution, Analysis and

Benchmark, represents the research core of the ARMOUR

project to achieve proven security and trust solutions for the

large-scale IoT. This phase involves the following sub-phases

which are performed iteratively:

• Configure. Install and configure the scenarios of the

IoT large-scale security and trust experiments.

• Measure. Take measurements and collect the data from

experiments.

• Pre-process. Perform pre-processing of stored

experimentation data (e.g. data-cleaning) as well as

organize them (e.g. transformations, semantic

annotations).

• Analyse. Analyse experimentation data (test

hypotheses), perform experiment benchmarking and

compare the performance.

• Report. Report on experiment results and the possibility

of publishing them for project dissemination.
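The iterative sub-phases above can be pictured as a small pipeline. The following is only an illustrative sketch: the function names, the `ExperimentRun` structure, the latency metric and the cleaning rule are all assumptions made for this example and are not part of the ARMOUR experimentation suite.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class ExperimentRun:
    """Hypothetical container for one iteration of an experiment."""
    scenario: str
    raw: list = field(default_factory=list)      # measurements as collected
    clean: list = field(default_factory=list)    # measurements after pre-processing
    summary: dict = field(default_factory=dict)  # analysis results


def configure(scenario):
    # Configure: set up the scenario (here, only its name is recorded).
    return ExperimentRun(scenario=scenario)


def measure(run, samples):
    # Measure: store the collected data (assumed to be latencies in ms).
    run.raw = list(samples)
    return run


def preprocess(run):
    # Pre-process: simple data cleaning, dropping missing or negative values.
    run.clean = [s for s in run.raw if s is not None and s >= 0]
    return run


def analyse(run):
    # Analyse: compute a basic figure that could feed a benchmark.
    if not run.clean:
        raise ValueError("no valid samples to analyse")
    run.summary = {"samples": len(run.clean), "mean_latency_ms": mean(run.clean)}
    return run


def report(run):
    # Report: produce a human-readable result line.
    s = run.summary
    return f"{run.scenario}: {s['samples']} samples, mean latency {s['mean_latency_ms']:.1f} ms"


def iterate(scenario, samples):
    # One pass through the five sub-phases, in order.
    return report(analyse(preprocess(measure(configure(scenario), samples))))
```

Calling `iterate` repeatedly with different scenarios or configurations corresponds to the iterative execution described in the text.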

The last phase of this methodology, Certification/Labelling

& Applications Framework, is focused on the creation of the

certification label and the establishment of a framework for

secure and trusted IoT applications. It involves:

• Develop a new labelling scheme for large-scale IoT

security and trust solutions that provides the user and

market confidence needed for their deployment,

adoption and use.

• Define a framework to specify how the different

security and trust solutions can be used to support the

design and deployment of secure and trusted

applications for large-scale IoT.

B. Experimentation execution

The ARMOUR experiments will be executed on a large-scale IoT facility: the FIT IoT-LAB testbed. This facility has

been enhanced for supporting secure and trusted

experimentation and offers a testbed (more than 2700 wireless

IoT nodes) for developing and deploying large-scale IoT

experiments. In addition, services already deployed, like

monitoring or sniffer, and advanced services developed in the

ARMOUR context, like replay or denial traffic injection, will

make it possible to carry out large-scale IoT experiments in the domain of

security and trust. Thus, examples of experiments that could be

performed include:

1. End-to-end connectivity between IoT nodes using

protocols like IPv6SEC, CoAP [10] / DTLS [11] or

6LoWPAN [12], in order to test secure solutions using

standard IETF protocols on different HW/OS.

2. Testing of specific secure MAC-layer or low-level

cryptographic mechanisms in a very stressful environment where

an attacker could inject or try to replay certain data traffic.

Also, the sniffer service could be used intensively in

the test.

C. Secured and trusted applications for large-scale IoT

Nowadays, there are many areas of people's lives to which

IoT applications can be extended. Specifically, within the

ARMOUR project the following application domains will be

considered. The first one is “remote healthcare” in

which the status of the patient is continuously monitored and

data is provided to the remote healthcare centre. Thus, doctors

can apply certain corrective actions when there is a change of

status. In this context, privacy risks should be investigated to

ensure that confidential data is not distributed to or eavesdropped on

by malicious entities. To address these risks, it is necessary that

IoT applications implement various solutions, such as defining

different roles with different levels of security for data access

(e.g. RBAC) or applying cryptographic mechanisms to prevent

security risks from becoming safety risks.
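As an illustration of the role-based access control (RBAC) idea mentioned above, the following minimal sketch maps roles to permitted actions on patient data. The role names, action names and the `is_allowed` helper are hypothetical and chosen only for this example; the source does not specify a concrete role model.

```python
# Hypothetical role-to-permission table for a remote healthcare application.
ROLE_PERMISSIONS = {
    "doctor": {"read_vitals", "write_prescription"},
    "nurse": {"read_vitals"},
    "technician": set(),  # maintains devices, no access to patient data
}


def is_allowed(role, action):
    """Return True only if the given role explicitly grants the action."""
    # Unknown roles get an empty permission set, so access is denied by default.
    return action in ROLE_PERMISSIONS.get(role, set())
```

Denying by default for unknown roles keeps the check conservative, which matches the goal of preventing confidential data from reaching unauthorized entities.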

Another domain is “business from home (teleworking)”. In

this one, workers are connected to various remote centres and

exchange information for business purposes. Because of this, it

is required that IoT applications provide appropriate

authentication mechanisms and ensure confidentiality to protect

business data and ensure that only authenticated and authorized

remote users can access business services.

The “integration with Smart City” is also considered and

involves applications like Intelligent Transport Systems (ITS)

or e-Government which could be integrated in a Smart Home

environment. This integration between multiple heterogeneous

systems and the definition of different levels of security are the

main aspects in this context.

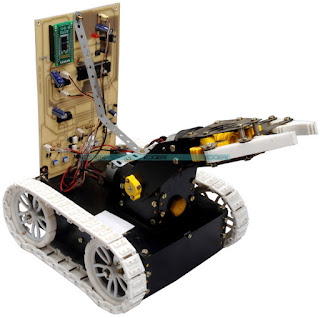

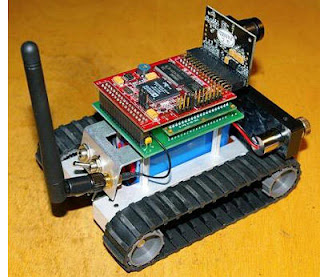

Finally, “smart mobile devices” are taken into account and

include applications related to the deployment of IoT mobile

devices in various contexts (e.g. shopping centre, logistics

centre) like robotic systems for cleaning or maintenance and

production systems. In this case, it is necessary to prevent

certain hazards and the disclosure of confidential information, and to

ensure that related coordinated operations cannot be disrupted

by malicious attackers.

D. Experimentation data and benchmarks

In ARMOUR, experimentation research data are mainly

represented as data sets. These data sets may be preserved in

different ways, and different data access

or usage policies may be imposed on them. ARMOUR foresees the use of data

repositories, and especially the FIESTA-IoT platform, to store,

archive and preserve these data sets.

FIESTA-IoT differs from typical data repositories by

allowing the execution of experiments over the stored data sets.

Moreover, FIESTA-IoT serves as a channel to give

data access to experimenters and researchers, either from the FIRE

(Future Internet Research Experimentation) community or

other research communities.

ARMOUR datasets will endow

FIESTA-IoT with the capability to perform security oriented

experiments. At the same time, experimenters can use platform

capabilities to run specific data processing algorithms to use and

transform data sets, to generate new ARMOUR data sets, and

to establish benchmarks to rank and compare results from

different experiments and IoT security and trust technological

solutions.


EXPERIMENTS’ DESCRIPTION

Now that the main aspects of the ARMOUR project have been

detailed, this section presents the design of the proposed

experiments, on which several tests will be carried out in order

to verify security and trust in the IoT context.

Specifically, three experiments are proposed, corresponding to the

bootstrapping, group sharing and software programming

stages, in order to solve or mitigate the threats that may arise

in each of these stages. The first experiment is

focused on providing a mechanism that allows devices to

authenticate and to request authorization to publish information

on an IoT platform. The second experiment is

intended to define a mechanism for secure information

exchange between groups of devices through the platform.

Finally, the third experiment focuses on the security

mechanisms that ensure that both the programmed device and the

programming entity are legitimate.

Before presenting the design of the experiments, it is

appropriate to briefly describe the entities that will appear in

them. Such entities model different types of devices that are

part of the IoT world.

• Smart Object or Device. It is a device with constrained

capabilities in terms of processing power, memory and

communication bandwidth (sensors, actuators, etc.). In

the bootstrapping stage, a Smart Object acts as both PANA

Client (PaC) [13] and Data Producer; that is, it tries to

get access to the network in order to subsequently publish

certain information on an IoT platform. On the other

hand, in the group sharing stage, it can act as Data

Producer (publishing data on the IoT platform) or as

Data Consumer (receiving data from the IoT platform).

• Gateway. It enables Smart Objects to

communicate with the Internet. In the bootstrapping stage,

it provides the functionality of a PANA Authentication

Agent (PAA) being responsible for authenticating and

authorizing the network access to different Smart

Objects acting as PaCs.

• AAA Server. Its functionality is to manage a very large

number of devices in terms of authentication and

authorization. In particular, in the bootstrapping

stage, it is responsible for the management of Smart

Objects that need to be authenticated and authorized to

access the IoT network.

• Policy Decision Point (PDP). It is the component of

the policy-based access control system that

determines whether or not to authorize a subject

to perform an action on a resource by evaluating its

access control policies. In the bootstrapping stage, it is

responsible for deciding whether or not to allow a

Smart Object acting as Data Producer to publish

information on an IoT platform.

• Capability Manager. It generates authorization tokens

for different Smart Objects. In the bootstrapping stage,

it interacts with the PDP to get authorization decisions

and to generate capability tokens accordingly.

• Attribute Authority. It issues and manages private keys.

In the group sharing stage, it calculates the CP-ABE

key [14] associated with the set of attributes of the

Smart Object that requests it.

• Pub/Sub Server. It supports the registration,

update and query of data, as well as sending

notifications when changes to the registered

information take place. In both stages (bootstrapping

and group sharing), the Pub/Sub Server receives

information publications from a certain Data Producer

and sends notifications with updated data to the set of

subscribed Data Consumers.

• Provisioning Server (PS). It is a repository that stores the

software images meant to be installed on devices. This

server is also responsible for the validation of the

identity of devices that request software updates.

A. Bootstrapping experiment

Figure 1 shows the bootstrapping experiment.

It begins with

the network access phase, in which the Smart Object, acting as

PaC, and the AAA Server exchange EAP messages [15] in order to

verify the identity of the former. To do this, a RADIUS-based

AAA infrastructure with pass-through configuration is used.

Note that the number of EAP messages exchanged between

these two entities depends on the authentication method used

(EAP-TLS, EAP-MD5, EAP-AKA, etc.). Based on the authentication result (Accept / Reject), the Gateway, acting as PAA,

will allow or deny network access to that Smart Object (PaC).

Once the network access phase has been successfully

completed, the phase for obtaining the capability token starts.

In this phase, the Smart Object initiates the communication with

the Capability Manager to request the token. Before

generating it, the Capability Manager needs to know whether the Smart Object (subject) is allowed

to publish (action) on the platform (resource). Thus, the

Capability Manager sends an XACML request [16] to the PDP,

which evaluates its access control policies and makes an

authorization decision. If the decision is

PERMIT, the Capability Manager generates the capability token and sends

it to the Smart Object, which allows the latter to publish

data on the IoT platform.

When the Smart Object is in possession of the capability

token, the data publication phase begins. In this phase, the

Smart Object, acting as Data Producer, communicates with the

Pub/Sub Server in order to publish information on the IoT

platform, attaching the token obtained in the previous phase.

When receiving the request, the Pub/Sub Server verifies the

capability token and, if such verification is successful, the

information is published.
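The token request and data publication phases described above can be sketched as follows. This is a simplified illustration, not the ARMOUR implementation: the XACML policies are replaced by a plain set of permitted (subject, action, resource) triples, the token is a JSON payload protected with an HMAC under a hypothetical key shared by the Capability Manager and the Pub/Sub Server (the source does not state how tokens are protected), and all names and identifiers are assumptions.

```python
import hashlib
import hmac
import json
import time

# Hypothetical key shared by the Capability Manager and the Pub/Sub Server.
SECRET = b"demo-shared-key"

# Stand-in for the PDP's access control policies (not real XACML).
POLICIES = {("sensor-42", "publish", "iot-platform")}


def pdp_decide(subject, action, resource):
    """PDP: evaluate the policies and return an authorization decision."""
    return "PERMIT" if (subject, action, resource) in POLICIES else "DENY"


def issue_token(subject, action, resource, ttl_s=300, now=None):
    """Capability Manager: ask the PDP, then generate a token on PERMIT."""
    if pdp_decide(subject, action, resource) != "PERMIT":
        return None
    issued = time.time() if now is None else now
    claims = {"sub": subject, "act": action, "res": resource, "exp": issued + ttl_s}
    payload = json.dumps(claims, sort_keys=True)
    tag = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_token(token, action, resource, now=None):
    """Pub/Sub Server: check integrity, expiry and the granted action."""
    expected = hmac.new(SECRET, token["payload"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, token["tag"]):
        return False  # payload was altered or the tag is forged
    claims = json.loads(token["payload"])
    current = time.time() if now is None else now
    return claims["act"] == action and claims["res"] == resource and claims["exp"] > current


def publish(store, token, topic, value):
    """Pub/Sub Server: publish only if the attached token verifies."""
    if token is None or not verify_token(token, "publish", "iot-platform"):
        return False
    store[topic] = value
    return True
```

For example, `publish({}, issue_token("sensor-42", "publish", "iot-platform"), "temperature", 21.5)` succeeds, while a Smart Object for which the PDP returns DENY receives no token and cannot publish.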

B. Group sharing experiment

The experiment for the group sharing stage is

shown in Figure 2.

As observed, the experiment begins with the phase in which

Smart Objects obtain their private keys. In this phase, the different

Smart Objects that will act as Data Consumers request

the corresponding CP-ABE key from the Attribute Authority.

Note that, in that request, Data Consumers include their

certificate (X.509 or attribute certificate) so that the Attribute

Authority can extract from it the set of attributes of such Data

Consumers and generate the corresponding CP-ABE keys for

them.

The subscription phase starts once the keys have been

received by the Data Consumers. In this phase, the Data

Consumers send subscription requests to the IoT platform for a

given topic, such as humidity or temperature. Thus, when a

Smart Object acting as Data Producer changes the topic value,

the subscribed Data Consumers will receive a notification with

the updated information.

Next, the Data Producer initiates the publication and

notification phase. Before publishing the

information on the platform, the Data Producer uses the CP-ABE cryptographic

scheme to encrypt the data under a policy of identity attributes (e.g.

atr1 || (atr2 && atr3)). Once the information is encrypted, the Data

Producer sends it to the Pub/Sub Server to publish it over a

given topic. When the Pub/Sub Server receives the message, it

checks whether any Data Consumers have registered subscriptions for

that topic. Then, to each subscribed Data Consumer, the

Pub/Sub Server sends a notification with the new

encrypted information associated with that topic. Finally, when

a Data Consumer receives the data, it tries to decrypt them

using its CP-ABE private key (obtained in the first phase),

which is associated with the set of attributes of such Data

Consumer. So, if its set of attributes satisfies the policy used in

the encryption process, the information will be revealed. Note

that all Data Consumers able to decrypt the data form a group

with dynamic and ephemeral trust relationships between such

entities.
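The way an attribute policy determines which Data Consumers end up in the group can be illustrated with a small sketch. Note that this code performs no encryption at all: in real CP-ABE the policy is enforced cryptographically by the ciphertext and the private keys, whereas here the policy is simply evaluated over attribute sets. The tuple-based policy encoding, the `satisfies` and `group_for` helpers and the consumer names are assumptions made for this example.

```python
def satisfies(policy, attributes):
    """Evaluate a policy tree over a set of attributes.

    A policy is either an attribute name (str) or a tuple
    ("and" | "or", operand, operand, ...), where operands are policies.
    """
    if isinstance(policy, str):
        return policy in attributes
    op, *operands = policy
    if op not in ("and", "or"):
        raise ValueError(f"unknown operator: {op}")
    results = [satisfies(p, attributes) for p in operands]
    return any(results) if op == "or" else all(results)


def group_for(policy, consumers):
    """Return the consumers whose attributes satisfy the policy, sorted by name."""
    return sorted(name for name, attrs in consumers.items() if satisfies(policy, attrs))


# The example policy from the text: atr1 || (atr2 && atr3)
POLICY = ("or", "atr1", ("and", "atr2", "atr3"))

# Hypothetical subscribed Data Consumers and their attribute sets.
CONSUMERS = {
    "consumer-a": {"atr1"},
    "consumer-b": {"atr2", "atr3"},
    "consumer-c": {"atr2"},
}
```

With these inputs, `group_for(POLICY, CONSUMERS)` yields `consumer-a` and `consumer-b`; `consumer-c` still receives the notification but would be unable to decrypt it.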

C. Software programming experiment

Figure 3 depicts the interactions between entities that take

part in the software update stage experiment. Here, the

Provisioning Server (PS) receives a new software image to be

executed by devices. The possible security issues related to

this step are outside the scope of this testing. After receiving a

new software image, the PS announces it to all devices in the

network by means of broadcast/multicast messages. When a

device receives the announcement, it may decide to request a

software update and sends a Software Access Request (step 3),

starting the authentication process. This authentication protocol

is strongly inspired by the Extensible Authentication Protocol

(EAP) and includes some adaptations to improve the efficiency

based on the conditions of the testing scenario.

In the Software Access Request message, the device sends

its identification information which allows the receiver to

identify its hardware and the current version of the software

that is running at the device. When the PS receives this

message, it tries to validate the Device ID by, for instance,

consulting registry tables or running validation algorithms over

the ID. If the Device ID is considered valid, then the PS will

send a challenge to the device. An exchange of challenges

occurs to allow the PS to prove its identity to the device and the

latter to prove that the software it is running is

legitimate.

To achieve this purpose, a Keyed-Hashing for Message

Authentication (HMAC) mechanism is to be used, in which

expressions and software fingerprint information are combined

using a hashing algorithm. Software fingerprints can be

obtained from the execution of specific functions that collect

and process metrics from the software, or can consist of a

message/code embedded within the software that can be

obtained using specific methods. Software fingerprints are

meant to be used to verify the authenticity of the software

because fingerprints are supposed to change whenever the

software is changed. Therefore, only the entities that know the

fingerprint information of the software version being used will

be able to produce the hash code. When the device informs the

PS which software version it is running, then the PS can search

in its database which fingerprint to use in the challenge

process.
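A challenge-response exchange of this kind can be sketched with Python's standard `hmac` module. This is a minimal illustration under stated assumptions: the software fingerprint is used directly as the HMAC key and the random challenge as the message, SHA-256 is chosen as the hash function, and the fingerprint database and version string are hypothetical. The source does not fix any of these choices.

```python
import hashlib
import hmac
import secrets

# Hypothetical PS database: software version -> fingerprint of that image.
FINGERPRINTS = {"1.0.3": b"fingerprint-of-image-1.0.3"}


def new_challenge():
    """Generate a fresh random challenge (nonce)."""
    return secrets.token_bytes(16)


def respond(challenge, fingerprint):
    """Compute the response: HMAC of the challenge keyed with the fingerprint."""
    return hmac.new(fingerprint, challenge, hashlib.sha256).hexdigest()


def ps_verify_device(version, challenge, response):
    """PS side: look up the fingerprint for the reported version and check the response."""
    fingerprint = FINGERPRINTS.get(version)
    if fingerprint is None:
        return False  # unknown software version
    return hmac.compare_digest(respond(challenge, fingerprint), response)


def device_verify_ps(own_fingerprint, challenge, response):
    """Device side: check that the PS also knows the fingerprint."""
    return hmac.compare_digest(respond(challenge, own_fingerprint), response)
```

Because only parties that know the fingerprint can compute a valid response, a device running modified software (and therefore holding a different fingerprint) fails `ps_verify_device`, and a server that does not hold the fingerprint fails `device_verify_ps`.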

If the challenge process is successful, then a secure

connection can be established and the software image is

transferred from the PS to the device through an encrypted

channel. In the whole process neither the software nor the

fingerprints should be exchanged using unprotected

communication channels. Different encryption algorithms,

hashing functions, and software fingerprinting methods will be

tested in order to identify the one(s) that provide the best

security and energy performance.
